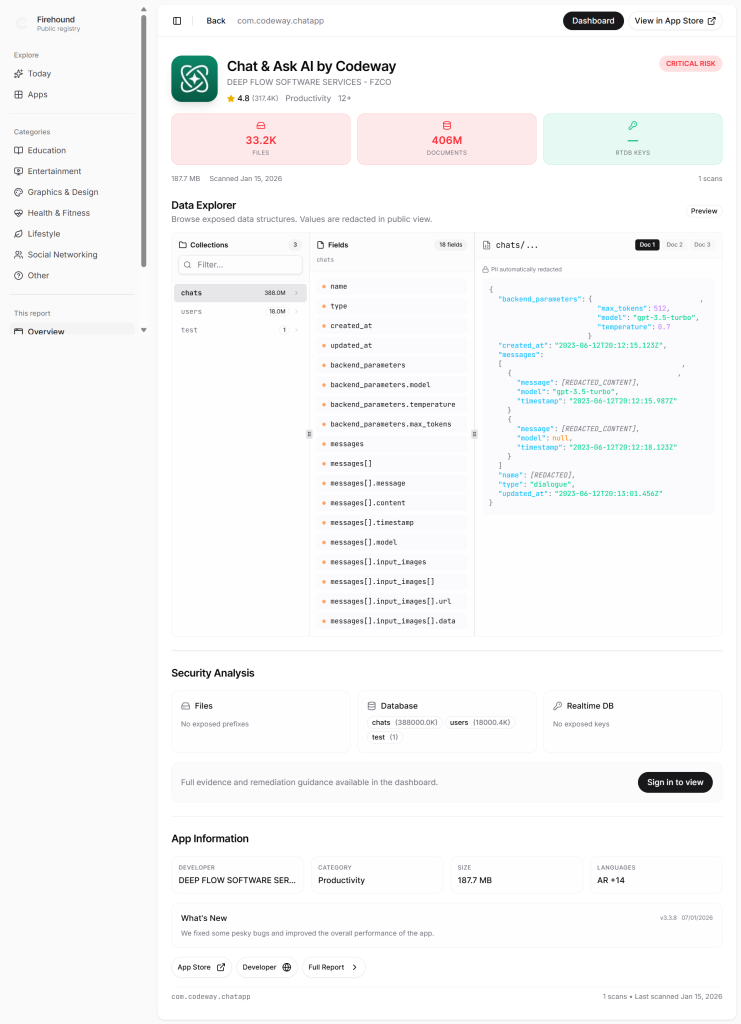

A significant security breach has jeopardized the private communications of millions of people after a database was left unprotected. The issue was uncovered by an independent researcher and involves the exposure of approximately 300 million messages from more than 25 million users of the widely downloaded Chat & Ask AI app, which boasts over 50 million installations across the Google Play and Apple App Stores.

Chat & Ask AI is operated by Codeway, a Turkish technology company established in Istanbul in 2020. The application acts as an interface for users to engage with well-known AI models, such as OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude. Because the app serves as a single access point to several systems, one technical oversight can affect user privacy on a global scale.

A Simple Door Left Open

This incident was not the result of a sophisticated attack; rather, it stemmed from a common error known as a Firebase misconfiguration. Firebase, a Google service intended for data management within applications, had its ‘Security Rules’ mistakenly set to public, effectively leaving the digital door ajar. As a result, anyone who knew where to look could read and delete user data without any authentication.
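To illustrate what "set to public" can look like, here is a minimal sketch of a rules audit. It assumes rules written in the JSON format used by Firebase Realtime Database; the article does not say which Firebase product or which exact rules were involved, so the two sample rulesets and the helper `find_public_rules` are hypothetical and not taken from the affected app.

```python
import json

def find_public_rules(rules, path=""):
    """Return (path, rule) pairs where read or write access is granted
    unconditionally. Simplified check: only a literal true is flagged."""
    findings = []
    for key, value in rules.items():
        if key in (".read", ".write"):
            # Flag the boolean true and the string "true".
            if value is True or (isinstance(value, str) and value.strip() == "true"):
                findings.append((path or "/", key))
        elif isinstance(value, dict):
            # Descend into child paths such as "chats" or "$uid".
            findings.extend(find_public_rules(value, f"{path}/{key}"))
    return findings

# Hypothetical ruleset that leaves the whole database open to anyone.
OPEN_RULES = json.loads('{"rules": {".read": true, ".write": true}}')

# Hypothetical ruleset that limits each user to their own records.
LOCKED_RULES = json.loads("""
{"rules": {"chats": {"$uid": {
    ".read": "auth != null && auth.uid === $uid",
    ".write": "auth != null && auth.uid === $uid"
}}}}
""")

if __name__ == "__main__":
    print(find_public_rules(OPEN_RULES["rules"]))    # [('/', '.read'), ('/', '.write')]
    print(find_public_rules(LOCKED_RULES["rules"]))  # []
```

A check like this catches only the most blatant case. Rules that look conditional can still be too permissive, so it would complement a proper review of the rules rather than replace one.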

The researcher, identified as Harry, reported that the exposed data included full chat histories and the custom names users had given their AI bots. The records also contained deeply personal and alarming requests, including discussions of illegal activities and requests for suicide assistance. Given that many users treat these bots as private journals, the breach raises serious privacy concerns.

Not the First Time

This incident is not isolated; prior cases have shown that AI chat platforms are vulnerable to data exposure. For example, OmniGPT previously experienced a breach that revealed sensitive user information, underscoring how quickly privacy violations can escalate when AI tools operate without stringent backend security. Although the technical causes differ, these incidents share a recurring theme: traditional application security pitfalls become far more damaging when the data at stake is highly personal conversations with AI services.

Lessons for AI Users

To guard against similar risks, users should avoid giving chatbots their real names or sharing sensitive documents, such as bank statements. Staying logged out of social media accounts while using these tools can also help prevent chat interactions from being linked to a real identity. Ultimately, every conversation should be treated as potentially public, and users should take great care over what they share.

James Wickett, CEO of DryRun Security, emphasized the heightened risks when AI systems are integrated into commercial products. He said the recent breach involved a known backend misconfiguration, which becomes particularly dangerous given the sensitivity of the data exposed. Wickett added that issues like prompt injection, data leakage, and insufficient output handling stop being theoretical once AI technologies are embedded in real products, making it imperative that applications enforce strict boundaries rather than presume trustworthy behavior.

The Chat & Ask AI incident exemplifies a larger concern in cybersecurity: traditional application vulnerabilities now put highly sensitive AI conversations at risk. As the technologies evolve, so too must the strategies for safeguarding user privacy and trust.