CISA Responds to Concerns Over Director’s Use of AI Tool Amid Compliance Review

The use of ChatGPT by the acting director of the U.S. Cybersecurity and Infrastructure Security Agency (CISA) has raised alarms about AI governance within federal cybersecurity oversight. The incident, which took place in mid-2025, involved uploading sensitive documents marked "for official use only" into ChatGPT, and it has prompted serious concern among cybersecurity veterans about leadership judgment at the agency.

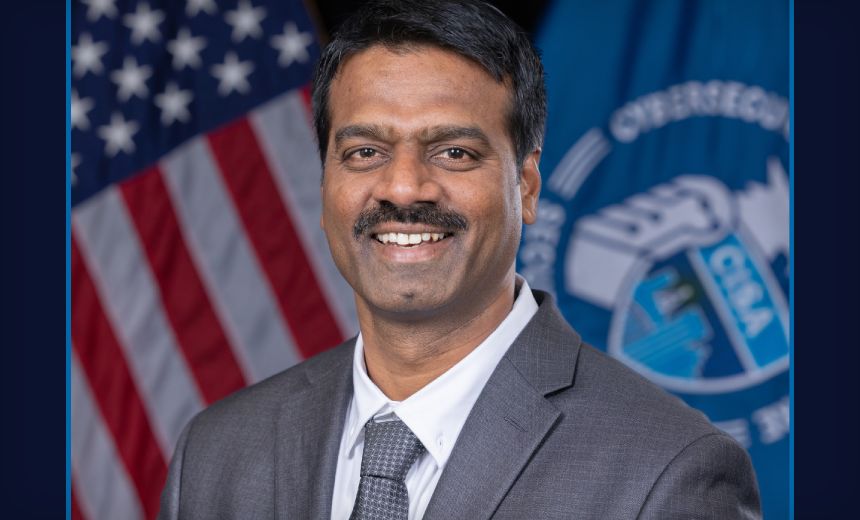

CISA Acting Director Madhu Gottumukkala's actions, first reported by Politico, triggered internal security alerts within the agency. Although the documents in question were not classified, they were restricted from public release. That restriction raised compliance questions and prompted an internal review whose outcome has not been made public.

AJ Grotto, a former White House cyber policy director, voiced concern about the decision, observing that adversaries could exploit such lapses in judgment. He emphasized that while experimentation is vital, it should take place in tightly controlled environments, noting that the federal government already faces significant challenges defending its networks against constant cyber threats.

CISA has said the use of ChatGPT was sanctioned. Director of Public Affairs Marci McCarthy stated that the tool was authorized under existing Department of Homeland Security (DHS) controls. McCarthy said Gottumukkala's access was temporary and limited, and described it as part of the agency's ongoing effort to modernize its cybersecurity work as directed by executive orders.

According to insiders, Gottumukkala last used ChatGPT in mid-July 2025, under an exception granted to specific agency personnel. CISA maintains that its default security controls block access to such AI tools unless their use is explicitly authorized.

Some experts argue that the agency's ability to detect such use points to a robust control environment, while noting a broader concern: many organizations have little visibility into how their employees interact with public AI tools. Andrew Gamino-Cheong, co-founder of Trustible, said that catching this incident reflects a degree of maturity in AI governance, even as shadow AI (employees' use of unsanctioned AI tools) poses ongoing challenges across sectors.

With the technology evolving quickly, experts argue that government agencies need to provide secure, approved alternatives faster. Darren Kimura, CEO of AI Squared, echoed the view that experimentation should happen in designated environments using synthetic or declassified data, rather than in unrestricted settings that invite unauthorized tool use.

The ChatGPT episode unfolds against a backdrop of increased scrutiny of CISA, which has been without a Senate-confirmed director for almost a year. The agency faces operational challenges, including significant staff turnover and leadership disruptions stemming from budget constraints and organizational restructuring.

As lawmakers continue to question CISA on staffing levels, governance frameworks, and control mechanisms, the incident has intensified debate over how AI should be used in cybersecurity work, where the push to innovate meets the need to manage risk. It also raises the question of whether current governance practices can keep pace with the risks of rapidly advancing technologies.